diff --git a/public/guides/asymptotic-notation.png b/public/guides/asymptotic-notation.png

deleted file mode 100644

index 827d5ba96..000000000

Binary files a/public/guides/asymptotic-notation.png and /dev/null differ

diff --git a/public/guides/avoid-render-blocking-javascript-with-async-defer.png b/public/guides/avoid-render-blocking-javascript-with-async-defer.png

deleted file mode 100644

index e489d93b4..000000000

Binary files a/public/guides/avoid-render-blocking-javascript-with-async-defer.png and /dev/null differ

diff --git a/public/guides/backend-languages/back-vs-front.png b/public/guides/backend-languages/back-vs-front.png

deleted file mode 100644

index aa0746d02..000000000

Binary files a/public/guides/backend-languages/back-vs-front.png and /dev/null differ

diff --git a/public/guides/backend-languages/backend-roadmap-part.png b/public/guides/backend-languages/backend-roadmap-part.png

deleted file mode 100644

index 425ba4d14..000000000

Binary files a/public/guides/backend-languages/backend-roadmap-part.png and /dev/null differ

diff --git a/public/guides/backend-languages/javascript-interest.png b/public/guides/backend-languages/javascript-interest.png

deleted file mode 100644

index 79efa69de..000000000

Binary files a/public/guides/backend-languages/javascript-interest.png and /dev/null differ

diff --git a/public/guides/backend-languages/pypl-go-index.png b/public/guides/backend-languages/pypl-go-index.png

deleted file mode 100644

index 7c6fd1c31..000000000

Binary files a/public/guides/backend-languages/pypl-go-index.png and /dev/null differ

diff --git a/public/guides/bash-vs-shell.jpeg b/public/guides/bash-vs-shell.jpeg

deleted file mode 100644

index 6f7c01f06..000000000

Binary files a/public/guides/bash-vs-shell.jpeg and /dev/null differ

diff --git a/public/guides/basic-authentication.png b/public/guides/basic-authentication.png

deleted file mode 100644

index 60fd1c53a..000000000

Binary files a/public/guides/basic-authentication.png and /dev/null differ

diff --git a/public/guides/basic-authentication/chrome-basic-auth.png b/public/guides/basic-authentication/chrome-basic-auth.png

deleted file mode 100644

index 0ada97354..000000000

Binary files a/public/guides/basic-authentication/chrome-basic-auth.png and /dev/null differ

diff --git a/public/guides/basic-authentication/safari-basic-auth.png b/public/guides/basic-authentication/safari-basic-auth.png

deleted file mode 100644

index 81d90d76b..000000000

Binary files a/public/guides/basic-authentication/safari-basic-auth.png and /dev/null differ

diff --git a/public/guides/big-o-notation.png b/public/guides/big-o-notation.png

deleted file mode 100644

index b78f0db27..000000000

Binary files a/public/guides/big-o-notation.png and /dev/null differ

diff --git a/public/guides/character-encodings.png b/public/guides/character-encodings.png

deleted file mode 100644

index 12008c7ca..000000000

Binary files a/public/guides/character-encodings.png and /dev/null differ

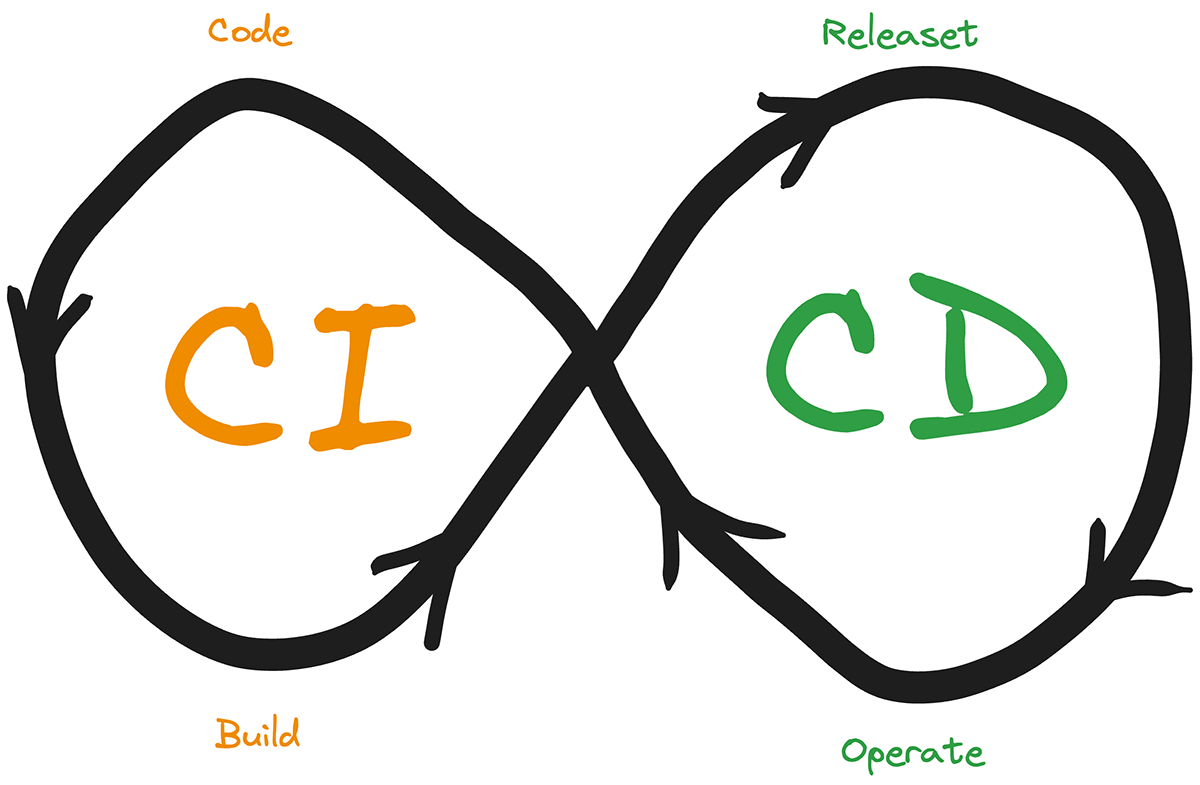

diff --git a/public/guides/ci-cd.png b/public/guides/ci-cd.png

deleted file mode 100644

index 1d3ad42c7..000000000

Binary files a/public/guides/ci-cd.png and /dev/null differ

diff --git a/public/guides/dhcp.png b/public/guides/dhcp.png

deleted file mode 100644

index 4e2f19837..000000000

Binary files a/public/guides/dhcp.png and /dev/null differ

diff --git a/public/guides/jwt-authentication.png b/public/guides/jwt-authentication.png

deleted file mode 100644

index 931fe709a..000000000

Binary files a/public/guides/jwt-authentication.png and /dev/null differ

diff --git a/public/guides/llms.png b/public/guides/llms.png

deleted file mode 100644

index c1445185a..000000000

Binary files a/public/guides/llms.png and /dev/null differ

diff --git a/public/guides/oauth.png b/public/guides/oauth.png

deleted file mode 100644

index d6f1cdb8a..000000000

Binary files a/public/guides/oauth.png and /dev/null differ

diff --git a/public/guides/project-history.png b/public/guides/project-history.png

deleted file mode 100644

index f116d03f9..000000000

Binary files a/public/guides/project-history.png and /dev/null differ

diff --git a/public/guides/proxy/forward-proxy.png b/public/guides/proxy/forward-proxy.png

deleted file mode 100644

index 572640f45..000000000

Binary files a/public/guides/proxy/forward-proxy.png and /dev/null differ

diff --git a/public/guides/proxy/proxy-example.png b/public/guides/proxy/proxy-example.png

deleted file mode 100644

index 058cd7c4d..000000000

Binary files a/public/guides/proxy/proxy-example.png and /dev/null differ

diff --git a/public/guides/proxy/reverse-proxy.png b/public/guides/proxy/reverse-proxy.png

deleted file mode 100644

index a9296a371..000000000

Binary files a/public/guides/proxy/reverse-proxy.png and /dev/null differ

diff --git a/public/guides/random-numbers.png b/public/guides/random-numbers.png

deleted file mode 100644

index 9306f3b95..000000000

Binary files a/public/guides/random-numbers.png and /dev/null differ

diff --git a/public/guides/scaling-databases.svg b/public/guides/scaling-databases.svg

deleted file mode 100644

index 855105dc9..000000000

--- a/public/guides/scaling-databases.svg

+++ /dev/null

@@ -1,87 +0,0 @@

-

diff --git a/public/guides/session-authentication.png b/public/guides/session-authentication.png

deleted file mode 100644

index 787bfee07..000000000

Binary files a/public/guides/session-authentication.png and /dev/null differ

diff --git a/public/guides/sli-slo-sla.jpeg b/public/guides/sli-slo-sla.jpeg

deleted file mode 100644

index 0e95c46ee..000000000

Binary files a/public/guides/sli-slo-sla.jpeg and /dev/null differ

diff --git a/public/guides/ssl-tls-https-ssh.png b/public/guides/ssl-tls-https-ssh.png

deleted file mode 100644

index 3a77c8ae6..000000000

Binary files a/public/guides/ssl-tls-https-ssh.png and /dev/null differ

diff --git a/public/guides/sso.png b/public/guides/sso.png

deleted file mode 100644

index e629aa809..000000000

Binary files a/public/guides/sso.png and /dev/null differ

diff --git a/public/guides/token-authentication.png b/public/guides/token-authentication.png

deleted file mode 100644

index a46b5ddc5..000000000

Binary files a/public/guides/token-authentication.png and /dev/null differ

diff --git a/public/guides/torrent-client/address.png b/public/guides/torrent-client/address.png

deleted file mode 100644

index 0a11fa5fc..000000000

Binary files a/public/guides/torrent-client/address.png and /dev/null differ

diff --git a/public/guides/torrent-client/bitfield.png b/public/guides/torrent-client/bitfield.png

deleted file mode 100644

index cd4384281..000000000

Binary files a/public/guides/torrent-client/bitfield.png and /dev/null differ

diff --git a/public/guides/torrent-client/choke.png b/public/guides/torrent-client/choke.png

deleted file mode 100644

index 3c6d374e9..000000000

Binary files a/public/guides/torrent-client/choke.png and /dev/null differ

diff --git a/public/guides/torrent-client/client-server-p2p.png b/public/guides/torrent-client/client-server-p2p.png

deleted file mode 100644

index a119bff9d..000000000

Binary files a/public/guides/torrent-client/client-server-p2p.png and /dev/null differ

diff --git a/public/guides/torrent-client/download.png b/public/guides/torrent-client/download.png

deleted file mode 100644

index 591d39b27..000000000

Binary files a/public/guides/torrent-client/download.png and /dev/null differ

diff --git a/public/guides/torrent-client/handshake.png b/public/guides/torrent-client/handshake.png

deleted file mode 100644

index 262da0d9a..000000000

Binary files a/public/guides/torrent-client/handshake.png and /dev/null differ

diff --git a/public/guides/torrent-client/info-hash-peer-id.png b/public/guides/torrent-client/info-hash-peer-id.png

deleted file mode 100644

index ad343bde3..000000000

Binary files a/public/guides/torrent-client/info-hash-peer-id.png and /dev/null differ

diff --git a/public/guides/torrent-client/info-hash.png b/public/guides/torrent-client/info-hash.png

deleted file mode 100644

index 1afeda969..000000000

Binary files a/public/guides/torrent-client/info-hash.png and /dev/null differ

diff --git a/public/guides/torrent-client/message.png b/public/guides/torrent-client/message.png

deleted file mode 100644

index b70155a7c..000000000

Binary files a/public/guides/torrent-client/message.png and /dev/null differ

diff --git a/public/guides/torrent-client/pieces.png b/public/guides/torrent-client/pieces.png

deleted file mode 100644

index efd22f321..000000000

Binary files a/public/guides/torrent-client/pieces.png and /dev/null differ

diff --git a/public/guides/torrent-client/pipelining.png b/public/guides/torrent-client/pipelining.png

deleted file mode 100644

index d6e5ebdfc..000000000

Binary files a/public/guides/torrent-client/pipelining.png and /dev/null differ

diff --git a/public/guides/torrent-client/trackers.png b/public/guides/torrent-client/trackers.png

deleted file mode 100644

index 8bbd6a84c..000000000

Binary files a/public/guides/torrent-client/trackers.png and /dev/null differ

diff --git a/public/guides/unfamiliar-codebase.png b/public/guides/unfamiliar-codebase.png

deleted file mode 100644

index c6afdec69..000000000

Binary files a/public/guides/unfamiliar-codebase.png and /dev/null differ

diff --git a/public/guides/web-vitals.png b/public/guides/web-vitals.png

deleted file mode 100644

index 835fad9f5..000000000

Binary files a/public/guides/web-vitals.png and /dev/null differ

diff --git a/src/data/guides/ai-data-scientist-career-path.md b/src/data/guides/ai-data-scientist-career-path.md

deleted file mode 100644

index 74a244d21..000000000

--- a/src/data/guides/ai-data-scientist-career-path.md

+++ /dev/null

@@ -1,198 +0,0 @@

----

-title: 'Is Data Science a Good Career? Advice From a Pro'

-description: 'Is data science a good career choice? Learn from a professional about the benefits, growth potential, and how to thrive in this exciting field.'

-authorId: fernando

-excludedBySlug: '/ai-data-scientist/career-path'

-seo:

- title: 'Is Data Science a Good Career? Advice From a Pro'

- description: 'Is data science a good career choice? Learn from a professional about the benefits, growth potential, and how to thrive in this exciting field.'

- ogImageUrl: 'https://assets.roadmap.sh/guest/is-data-science-a-good-career-10j3j.jpg'

-isNew: false

-type: 'textual'

-date: 2025-01-28

-sitemap:

- priority: 0.7

- changefreq: 'weekly'

-tags:

- - 'guide'

- - 'textual-guide'

- - 'guide-sitemap'

----

-

-

-

-Data science is one of the most talked-about career paths today, but is it the right fit for you?

-

-With [data science](https://roadmap.sh/ai-data-scientist) at the intersection of technology, creativity, and impact, it can be a very appealing role. It promises high, competitive salaries and the chance to solve real-world problems. Who would say “no” to that?!

-

-But is it the right fit for your skills and aspirations?

-

-In this guide, I’ll help you uncover the answer to that question by understanding the pros and cons of working as a data scientist. I’ll also look at what the data scientists’ salaries are like and the type of skills you’d need to have to succeed at the job.

-

-Now sit down, relax, and read carefully, because I’m about to help you answer the question of “Is data science a good career for me?”.

-

-## Pros of a Career in Data Science

-

-

-

-There are plenty of “pros” when it comes to picking data science as your career, but let’s take a closer look at the main ones.

-

-### High Demand and Job Security

-

-The demand for data scientists has grown exponentially over the past few years and shows no signs of slowing down. According to the [U.S. Bureau of Labor Statistics](https://www.bls.gov/ooh/math/data-scientists.htm), the data science job market is projected to grow by 36% from 2023 to 2033, far outpacing the average for other fields.

-

-This surge is partly due to the “explosion” of artificial intelligence, particularly tools like ChatGPT, in recent years, which have amplified the need for skilled data scientists to handle complex machine learning models and big data analysis.

-

-### Competitive Salaries

-

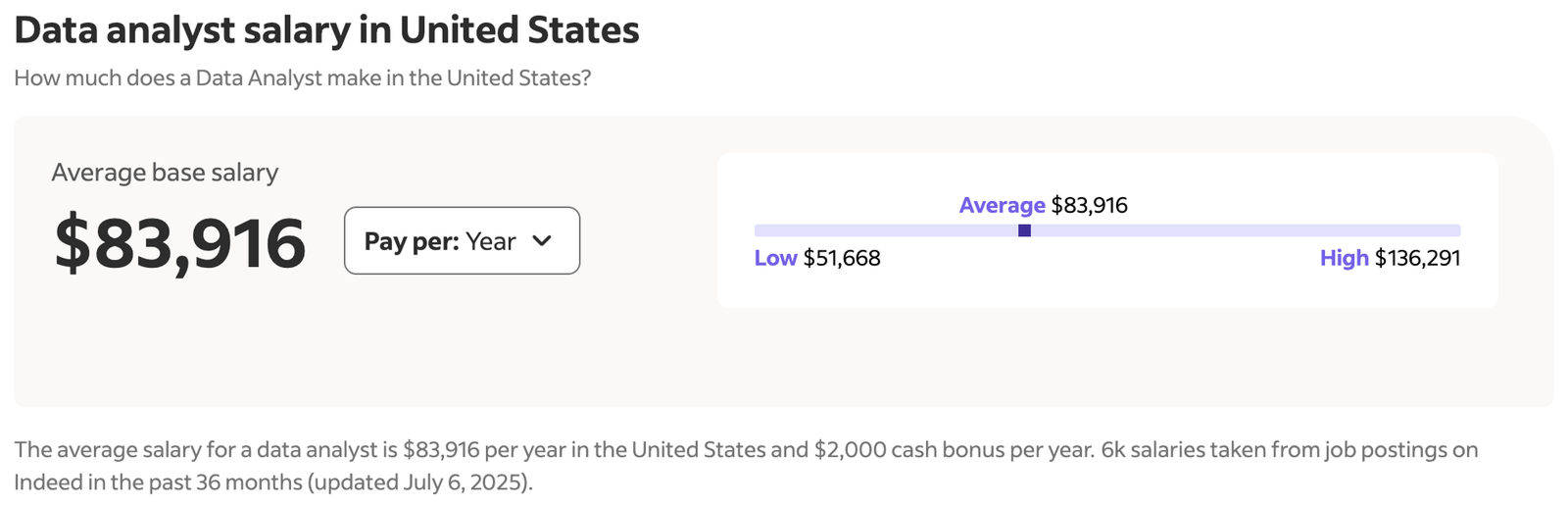

-One of the most appealing aspects of data science positions is the average data scientist’s salary. Reports from Glassdoor and Indeed highlight that data scientists are among the highest-paid professionals in the technology sector. For example, the national average salary for a data scientist in the United States is approximately $120,000 annually, with experienced professionals earning significantly more.

-

-These salaries are a reflection of the reality: the high demand for [data science skills](https://roadmap.sh/ai-data-scientist/skills) and the technical expertise required for these roles are not easy to come by. What’s even more, companies in high-cost regions, such as Silicon Valley, New York City, and Seattle, tend to offer premium salaries to attract top talent.

-

-The financial rewards in this field are usually complemented by additional benefits such as opportunities for professional development like research, publishing, patent registration, etc.

-

-### Intellectual Challenge and Learning Opportunities

-

-Data scientists work in a field that demands continuous learning and adaptation to emerging technologies. Their field is rooted in solving complex problems through a combination of technical knowledge, creativity, and critical thinking. In other words, they rarely have any time to get bored.

-

-What makes data science important and intellectually rewarding is its ability to address real-world problems. Whether it's optimizing healthcare systems, enhancing customer experiences in retail, or predicting financial risks, data science applications have a tangible impact on people.

-

-This makes data science a good career for individuals who are passionate about lifelong learning and intellectual stimulation.

-

-### Versatility

-

-Data science is a good career choice for those who enjoy variety and flexibility. One of the unique aspects of a career in data science is its ability to reach across various industries and domains (I’m talking technology, healthcare, finance, e-commerce, and even entertainment to name a few). This means data scientists can apply their data science skills in almost any sector that generates or relies on data—which is virtually all industries today.

-

-## Cons of a Career in Data Science

-

-

-

-The data science job is not without its “cons”; after all, there is no “perfect” role out there. Let’s now review some of the potential challenges that come with the role.

-

-### Steep Learning Curve

-

-The steep learning curve in data science is one of the field’s defining characteristics. New data scientists have to develop a deep understanding of technical skills, including proficiency in programming languages like Python, R, and SQL, as well as tools for machine learning and data visualization.

-

-On top of the already complex subjects to master, data scientists need to find ways of staying current with the constant advancements in the field. This is not optional; it’s a necessity for anyone trying to achieve long-term success in data science. This constant evolution can feel overwhelming, especially for newcomers who are also learning foundational skills.

-

-Despite these challenges, the steep learning curve can be incredibly rewarding for those who are passionate about solving problems, making data-driven decisions, and contributing to impactful projects.

-

-While it might sound harsh, it’s important to note that the dedication required to overcome these challenges often results in a fulfilling and (extremely) lucrative career in the world of data science.

-

-### High Expectations

-

-Data science positions come with high expectations from organizations. Data scientists usually have the huge responsibility of delivering actionable insights and ensuring these insights are both accurate and timely.

-

-One of the key challenges data science professionals face is managing the pressure to deliver results under tight deadlines (they’re always tight). Stakeholders often expect instant answers to complex problems, which can lead to unrealistic demands.

-

-To succeed in such environments, skilled data scientists need strong communication skills to explain their findings and set realistic expectations with stakeholders.

-

-### Potential Burnout

-

-The high demand for data science skills usually translates into heavy workloads and tight deadlines, particularly for data scientists working on high-stakes projects (working extra hours is also not an uncommon scenario).

-

-Data scientists frequently juggle multiple complex responsibilities, such as modeling data, developing machine learning algorithms, and conducting statistical analysis—often within limited timeframes.

-

-The intense focus required for these tasks, combined with overlapping priorities (and a small dose of poor project management), can lead to mental fatigue and stress.

-

-Work-life balance can also be a challenge for data scientists, which gives them another reason for burnout. Add a highly active industry, like finance, and the balance becomes very hard to maintain.

-

-To mitigate the risk of burnout, data scientists can try to prioritize setting boundaries, managing workloads effectively (when that’s an option), and advocating for clearer role definitions (better separation of concerns).

-

-## Skills Required for a Data Science Career

-

-

-

-To develop a successful career in data science, not all of your [skills](https://roadmap.sh/ai-data-scientist/skills) need to be technical. You also need soft skills, domain knowledge, and a mentality of lifelong learning.

-

-Let’s take a closer look.

-

-### Technical Skills

-

-The field of data requires strong foundational technical skills. At the core of these skills is proficiency in programming languages such as Python, R, and SQL. Python is particularly useful and liked for its versatility and extensive libraries, while SQL is essential for querying and managing database systems. R remains a popular choice for statistical analysis and data visualization.

-

-In terms of frameworks, look into TensorFlow, PyTorch, or Scikit-learn. They’re all crucial for building predictive models and implementing artificial intelligence solutions. Tools like Tableau, Power BI, and Matplotlib are fantastic for creating clear and effective data visualizations, which play a significant role in presenting actionable insights.

-

-### Soft Skills

-

-As I said before, it’s not all about technical skills. Data scientists must also develop their soft skills, which are key in the field.

-

-These range from problem-solving and analytical thinking to communication and the ability to collaborate with others. They all work together to help you communicate complex insights and results to non-technical stakeholders, which is going to be a key activity in your day-to-day life.

-

-### Domain Knowledge

-

-While technical and soft skills are essential, domain knowledge often distinguishes exceptional data scientists from the rest. Understanding industry-specific contexts—such as healthcare regulations, financial market trends, or retail customer behavior—enables data scientists to deliver tailored insights that directly address business needs. If you understand your problem space, you understand the needs of your client and the data you’re dealing with.

-

-Getting that domain knowledge often involves on-the-job experience, targeted research, or additional certifications.

-

-### Lifelong Learning

-

-Finally, if you’re going to be a data scientist, you’ll need to embrace a mindset of continuous learning. The field evolves rapidly, with emerging technologies, tools, and methodologies reshaping best practices. Staying competitive requires consistent professional development through online courses, certifications, conferences, and engagement with the broader data science community.

-

-Lifelong learning is not just a necessity but also an opportunity to remain excited and engaged in a dynamic and rewarding career.

-

-## How to determine if data science is right for you?

-

-

-

-How can you tell if you’ll actually enjoy working as a data scientist? Even after reading this far, you might still have some doubts. So in this section, I’m going to look at some ways in which you can validate that you’ll enjoy the job of a data scientist before you go through the process of becoming one.

-

-### Self-Assessment Questions

-

-Figuring out whether data science is the right career path starts with introspection. Ask yourself the following:

-

-* Do you enjoy working with numbers and solving complex problems?

-* Are you comfortable learning and applying programming skills like Python and SQL?

-* Are you excited by the idea of using algorithms to create data-driven insights and actionable recommendations?

-* Are you willing to commit to continuous learning in a fast-evolving field?

-

-Take your time while you think about these questions. You don’t even need a full answer, just try to understand how you feel about the idea of each one. If you don’t feel like saying “yes”, then chances are, this might not be the right path for you (and that’s completely fine!).

-

-### Start with Small Projects

-

-If self-assessment is not your thing, another great way to explore your interest in data science is to dive into small, manageable projects. Platforms like Kaggle offer competitions and publicly available data sets, allowing you to practice exploratory data analysis, data visualization, and predictive modeling. Working on these projects can help you build a portfolio, develop confidence in your skills, and confirm that you actually enjoy working this way.

-

-Online courses and certifications in data analytics, machine learning, and programming languages provide a structured way to build foundational knowledge. Resources like Coursera, edX, and DataCamp offer beginner-friendly paths to learning data science fundamentals.

-

-### Network and Seek Mentorship

-

-Another great way to find out whether you would like to be a data scientist is to ask other data scientists. It might sound basic, but it’s very effective because you’ll get insights about the field straight from the source.

-

-Networking, while not easy for everyone, is a key component of entering the data science field. Go to data science meetups, webinars, or conferences to expand your network and stay updated on emerging trends and technologies.

-

-If you’re not into big groups, try seeking mentorship from data scientists already working in the field. This can accelerate your learning curve. Mentors can offer guidance on career planning, project selection, and skill development.

-

-## Alternative career paths to consider

-

-

-

-Not everyone who is interested in data science wants to pursue the full spectrum of technical skills or the specific responsibilities of a data scientist. Lucky for you, there are several related career paths that can still scratch your itch for fun and interesting challenges while working within the data ecosystem.

-

-### Data-Adjacent Roles

-

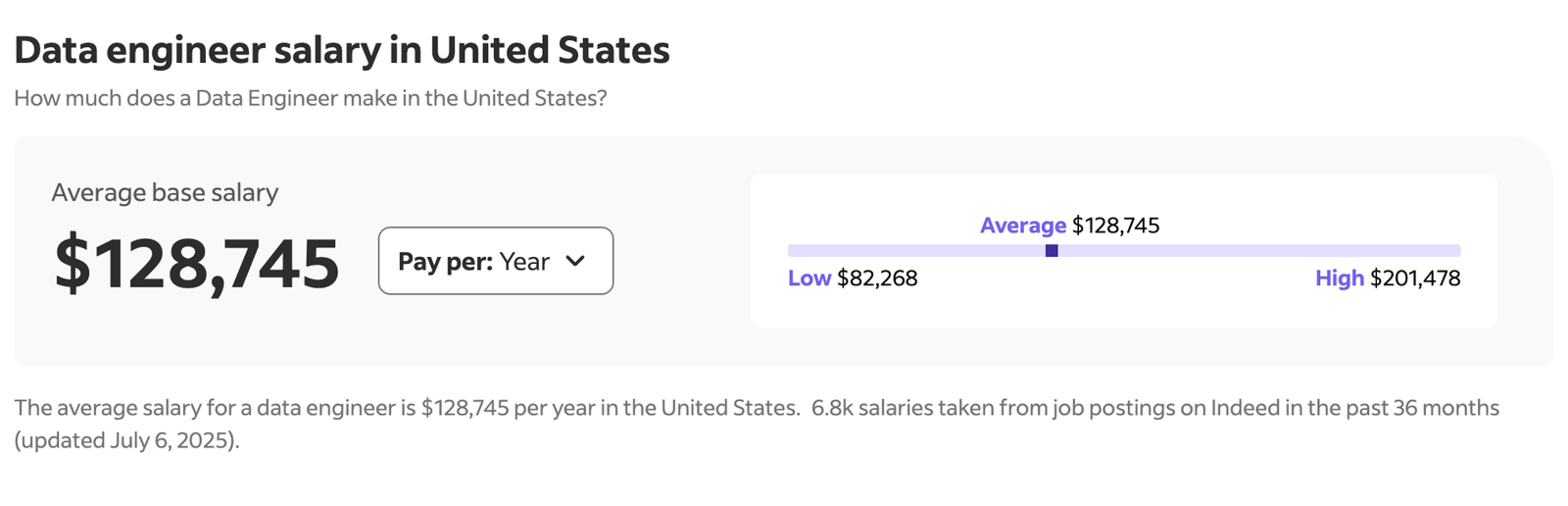

-* **Data Analyst**: If you enjoy working with data but prefer focusing on interpreting and visualizing it to inform business decisions, a data analyst role might be for you. Data analysts primarily work on identifying trends and providing actionable recommendations without diving deeply into machine learning or predictive modeling.

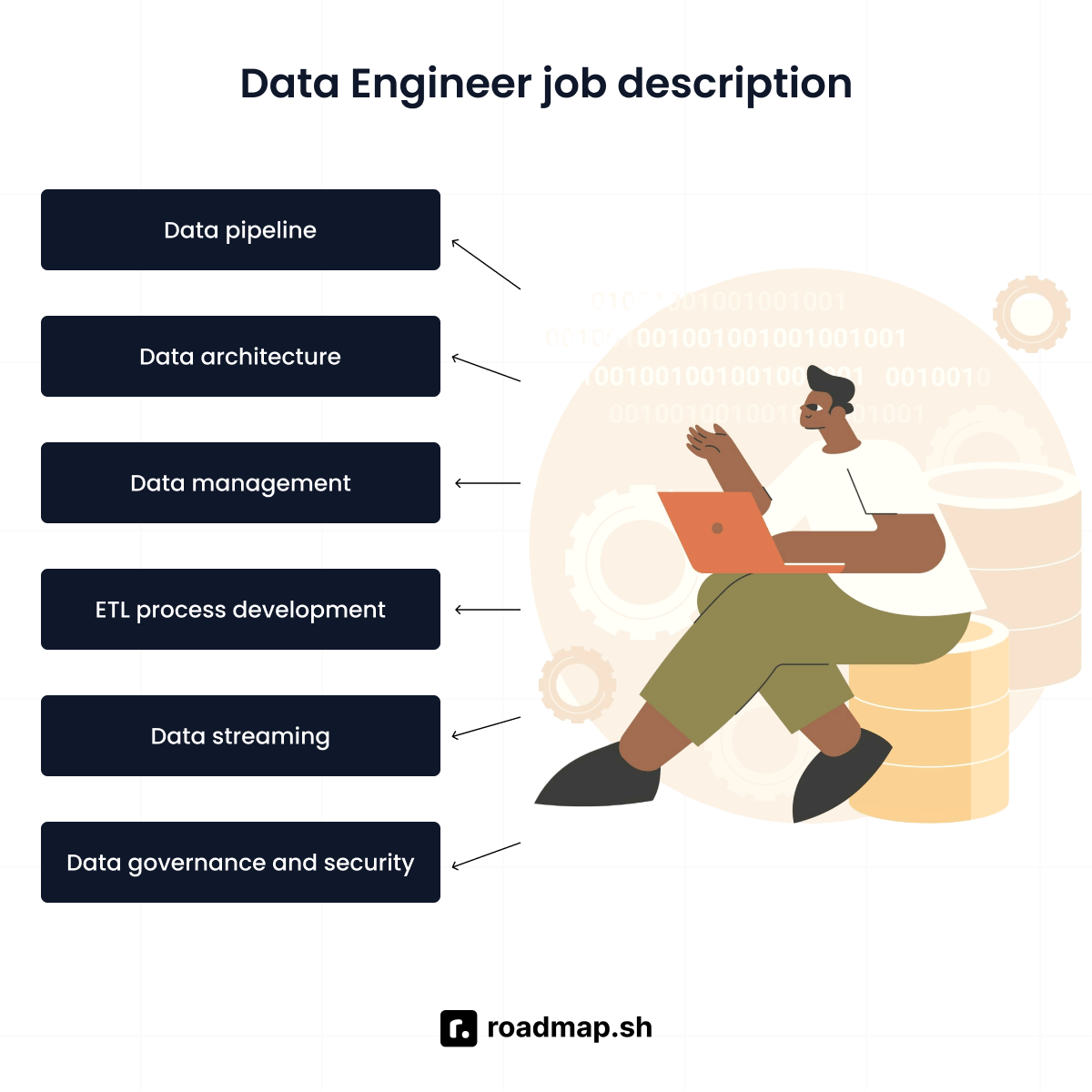

-* **Data Engineer**: If you’re more inclined toward building the infrastructure that makes data science possible, consider becoming a data engineer. These data professionals design, build, and maintain data pipelines, ensuring the accessibility and reliability of large data sets for analysis. The role requires expertise in database systems, data structures, and programming.

-

-### Related Fields

-

-* **Software Engineering**: For those who enjoy coding and software development but want to remain close to data-related projects, software engineering offers opportunities to build tools, applications, and systems that support data analysis and visualization.

-* **Cybersecurity**: With the increasing emphasis on data privacy and security, cybersecurity professionals play a critical role in protecting sensitive information. This field combines technical knowledge with policy enforcement, making it appealing to those interested in data protection and regulatory compliance.

-

-### Non-Technical Roles in the Data Ecosystem

-

-* **Data Governance**: If, instead of transforming data and getting insights, you’d like to focus more on how the data is governed (accessed, controlled, cataloged, etc.), then this might be the role for you. This role is essential for ensuring that an organization’s data assets are used effectively and responsibly.

-* **Data Privacy Officer**: In a similar vein to a data governance officer, the data privacy officer looks after the privacy of the data itself. With the rise of AI, data is more relevant than ever, and ensuring compliance with regulations like GDPR and CCPA is critical for organizations. This role focuses on data privacy strategies, audits, and risk management, making it an excellent fit for those interested in the legal and ethical aspects of data.

-

-## Next steps

-

-

-

-Data science is a promising career path offering high demand, competitive salaries, and multiple opportunities across various industries. Its ability to address real-world problems, combined with the intellectual challenge it presents, makes it an attractive choice for many. However, it also makes it a very difficult and taxing profession for those who don’t enjoy this type of challenge.

-

-There are many potential next steps for you to take and answer the question of “Is data science a good career?”.

-

-For example, you can reflect on your interests and strengths. Ask yourself whether or not you enjoy problem-solving, working with data sets, and learning new technologies. Use this reflection to determine if data science aligns with your career goals.

-

-You can also consume resources like the [AI/Data Scientist roadmap](https://roadmap.sh/ai-data-scientist) and the [Data Analyst roadmap](https://roadmap.sh/data-analyst), as they offer a clear progression for developing essential skills, so check them out. These tools can help you identify which areas to focus on based on your current expertise and interests.

-

-In the end, just remember: data science is rapidly evolving, so make sure to stay engaged by reading research papers, following industry blogs, or attending conferences. Anything you can do will help; just figure out what works for you and keep doing it.

diff --git a/src/data/guides/ai-data-scientist-lifecycle.md b/src/data/guides/ai-data-scientist-lifecycle.md

deleted file mode 100644

index 9414ddf32..000000000

--- a/src/data/guides/ai-data-scientist-lifecycle.md

+++ /dev/null

@@ -1,200 +0,0 @@

----

-title: "Data Science Lifecycle 101: A Beginners' Ultimate Guide"

-description: 'Discover the Data Science Lifecycle step-by-step: Learn key phases, tools, and techniques in this beginner-friendly guide.'

-authorId: fernando

-excludedBySlug: '/ai-data-scientist/career-path'

-seo:

- title: "Data Science Lifecycle 101: A Beginners' Ultimate Guide"

- description: 'Discover the Data Science Lifecycle step-by-step: Learn key phases, tools, and techniques in this beginner-friendly guide.'

- ogImageUrl: 'https://assets.roadmap.sh/guest/data-science-lifecycle-eib3s.jpg'

-isNew: false

-type: 'textual'

-date: 2025-01-29

-sitemap:

- priority: 0.7

- changefreq: 'weekly'

-tags:

- - 'guide'

- - 'textual-guide'

- - 'guide-sitemap'

----

-

-

-

-Developing a data science project from beginning to production is not a trivial task. It involves so many steps and so many complex tasks that, without some guardrails, releasing to production becomes far harder.

-

-Here’s where the data science lifecycle comes into play. It brings a structured approach so that [data scientists](https://roadmap.sh/ai-data-scientist), data analysts, and others can move forward together from raw data to actionable insights.

-

-In this guide, we’ll cover everything you need to know about the data science lifecycle, its many variants, and how to pick the right one for your project.

-

-So let’s get going!

-

-## Core Concepts of a Lifecycle

-

-

-

-To fully understand the concept of the lifecycle, we have to look at the core concepts inside this framework, and how they contribute to the delivery of a successful data science project.

-

-### Problem Definition

-

-Every data science project begins with a clear definition of the problem to be solved. This involves collaborating with key stakeholders to identify objectives and desired outcomes. Data scientists must understand the context and scope of the project to ensure that the goals align with business or research needs.

-

-### Data Collection

-

-In the data collection phase, data scientists and data engineers work together and gather relevant data from diverse data sources. This includes both structured and unstructured data, such as historical records, new data, or data streams.

-

-The process ensures the integration of all pertinent data, creating a robust dataset for the following stages. Data acquisition tools and strategies play a critical role in this phase.

-

-### Data Preparation

-

-This stage addresses the quality of raw data by cleaning and organizing it for analysis. Tasks such as treating inaccurate data, handling missing values, and converting raw data into usable formats are central to this stage. This stage prepares the data for further and more detailed analysis.

-

-### Exploratory Data Analysis (EDA)

-

-The exploratory data analysis stage is where the “data processing” happens. This stage focuses on uncovering patterns, trends, and relationships within the data. Through data visualization techniques such as bar graphs and statistical models, data scientists perform a thorough data analysis and gain insights into the data’s structure and characteristics.

-

-Like every stage so far, this one lays the foundation for the upcoming stages. In this particular case, after performing a detailed EDA, data scientists have a much better understanding of the data they have to work with, and a pretty good idea of what they can do with it now.

-

-### Model Building and Evaluation

-

-The model building phase involves developing predictive or machine learning models tailored to the defined problem. Data scientists experiment with various machine learning algorithms and statistical models to determine the best approach. Here’s where data modeling happens, bridging the insights gained during the exploratory data analysis (EDA) phase with actionable predictions and outcomes used in the deployment phase.

-

-Model evaluation follows, where the performance and accuracy of these models are tested to ensure reliability.

-

-### Deployment and Monitoring

-

-The final stage of this generic data science lifecycle involves deploying the model into a production environment. Here, data scientists, machine learning engineers, and quality assurance teams ensure that the model operates effectively within existing software systems.

-

-After this stage, continuous monitoring and maintenance are essential to address new data or changing conditions, which can impact the performance and accuracy of the model.

-

-## Exploring 6 Popular Lifecycle Variants

-

-

-

-The data science lifecycle offers various frameworks tailored to specific needs and contexts. Below, we explore six prominent variants:

-

-### CRISP-DM (Cross Industry Standard Process for Data Mining)

-

-CRISP-DM is one of the most widely used frameworks in data science projects, especially within business contexts.

-

-It organizes the lifecycle into six stages: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment.

-

-This iterative approach allows teams to revisit and refine previous steps as new insights emerge. CRISP-DM is ideal for projects where aligning technical efforts with business goals is very important.

-

-**Example use case**: A retail company wants to improve customer segmentation for targeted marketing campaigns. Using CRISP-DM, the team starts with business understanding to define segmentation goals, gathers transaction and demographic data, prepares and cleans it, builds clustering models, evaluates their performance, and deploys the best model to group customers for personalized offers.

-

-### KDD (Knowledge Discovery in Databases)

-

-The KDD process focuses on extracting useful knowledge from large datasets. Its stages include Selection, Preprocessing, Transformation, Data Mining, and Interpretation/Evaluation.

-

-KDD emphasizes the academic and research-oriented aspects of data science, making it an ideal choice for experimental or exploratory projects in scientific domains. It offers a systematic approach to discovering patterns and insights in complex datasets.

-

-**Example use case:** A research institute analyzes satellite data to study climate patterns. They follow KDD by selecting relevant datasets, preprocessing to remove noise, transforming data to highlight seasonal trends, applying data mining techniques to identify long-term climate changes, and interpreting results to publish findings.

-

-### Data Analytics Lifecycle

-

-This specific data science lifecycle is tailored for enterprise-level projects that prioritize actionable insights. It’s composed of six stages: Discovery, Data Preparation, Model Planning, Model Building, Communicating Results, and Operationalizing.

-

-The framework’s strengths lie in its alignment with business objectives and readiness for model deployment, making it ideal for organizations seeking to integrate data-driven solutions into their operations.

-

-**Example use case:** A financial institution uses the Data Analytics Lifecycle to detect fraudulent transactions. They discover patterns in historical transaction data, prepare it by cleaning and normalizing, plan predictive models, build and test them, communicate results to fraud prevention teams, and operationalize the model to monitor real-time transactions.

-

-### SEMMA (Sample, Explore, Modify, Model, Assess)

-

-SEMMA is a straightforward and tool-centric framework developed by SAS. It focuses on sampling data, exploring it for patterns, modifying it for analysis, modeling it for predictions, and assessing the outcomes.

-

-This lifecycle is particularly useful for workflows involving specific analytics tools. Its simplicity and strong emphasis on data exploration make it an excellent choice for teams prioritizing rapid insights.

-

-**Example use case:** A healthcare organization predicts patient readmission rates using SEMMA. They sample data from hospital records, explore patient histories for trends, modify features like patient age and diagnoses, build machine learning models, and assess their accuracy to choose the most effective predictor.

-

-### Team Data Science Process (TDSP)

-

-TDSP offers a collaborative and agile framework that organizes the lifecycle into four key stages: Business Understanding, Data Acquisition, Modeling, and Deployment.

-

-Designed with team-based workflows in mind, TDSP emphasizes iterative progress and adaptability, ensuring that projects align with business needs while remaining flexible to changes. It’s well-suited for scenarios requiring close collaboration among data scientists, engineers, and stakeholders.

-

-**Example use case:** A logistics company improves delivery route optimization. Using TDSP, the team collaborates to understand business goals, acquires data from GPS and traffic systems, develops routing models, and deploys them to dynamically suggest the fastest delivery routes.

-

-### MLOps Lifecycle

-

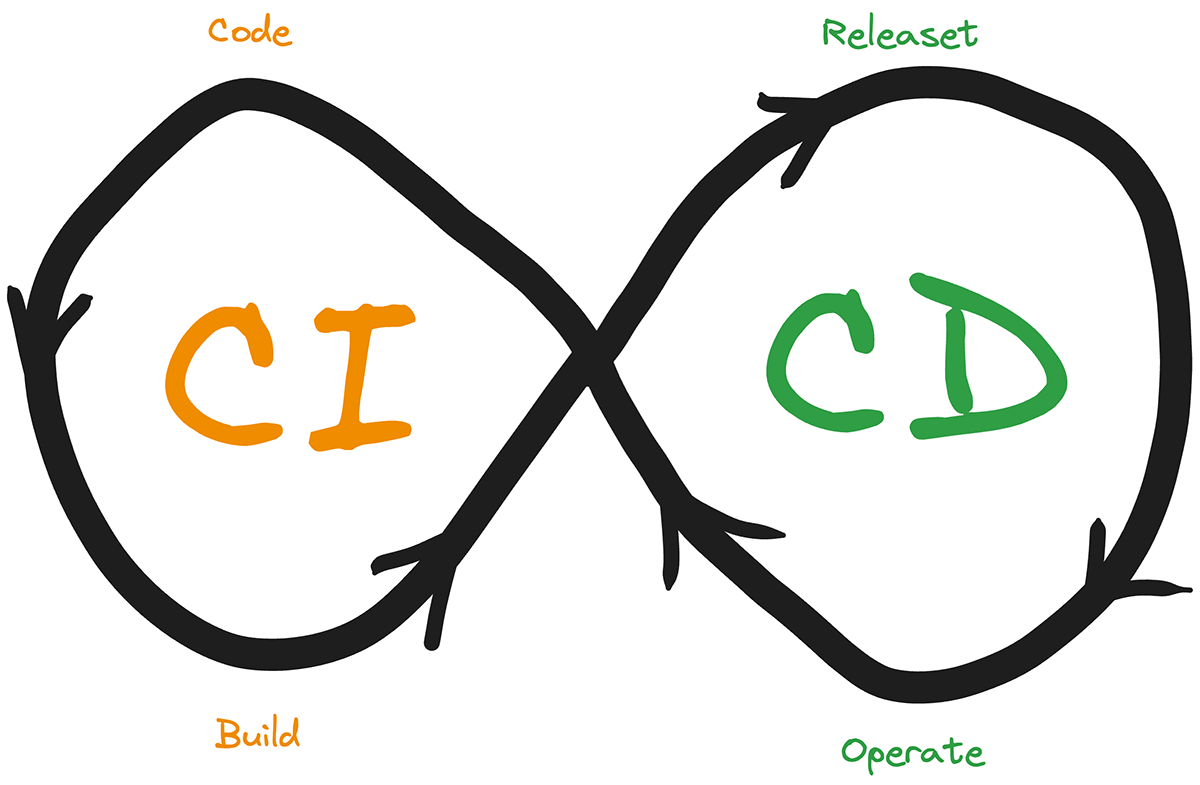

-MLOps focuses specifically on machine learning operations and production environments. Its stages include Data Engineering, Model Development, Model Deployment, and Monitoring.

-

-This lifecycle is essential for projects involving large-scale machine learning systems that demand high scalability and automation.

-

-MLOps integrates seamlessly with continuous integration and delivery pipelines, ensuring that deployed models remain effective and relevant as new data is introduced.

-

-**Example use case:** An e-commerce platform deploys a recommendation engine using MLOps. They engineer data pipelines from user activity logs, develop collaborative filtering models, deploy them on the website, and monitor their performance to retrain models when new user data is added.

-

-Each of these six frameworks has its own strengths and is suited to different types of data science operations.

-

-## How to Choose the Right Data Science Lifecycle

-

-

-

-Choosing the most suitable lifecycle for your data science project requires a systematic approach. After all, no single lifecycle fits every situation.

-

-You can follow these steps to identify the framework that aligns best with your goals and resources:

-

-1. **Define your objectives:** Clearly identify the goals of your project. Are you solving a business problem, conducting academic research, or deploying a machine learning model? Understanding the end objective will narrow down your choices.

-2. **Assess project complexity:** Evaluate the scope and intricacy of your project. Simple projects may benefit from streamlined frameworks like SEMMA, while complex projects with iterative requirements might need CRISP-DM or TDSP.

-3. **Evaluate your team composition:** Consider the expertise within your team. A team with strong machine learning skills may benefit from MLOps, whereas a diverse team with varying levels of experience might prefer a more general framework like CRISP-DM.

-4. **Analyze industry and domain requirements:** Different industries may have unique needs. For example, business-driven projects often align with the Data Analytics Lifecycle, while academic projects might find KDD more suitable.

-5. **Examine available tools and resources:** Ensure that the tools, software, and infrastructure you have access to are compatible with your chosen lifecycle. Frameworks like SEMMA may require specific tools such as SAS.

-6. **Match to key stakeholder needs:** Align the lifecycle with the expectations and requirements of stakeholders. A collaborative framework like TDSP can be ideal for projects needing frequent input and iteration with business partners.

-7. **Run a trial phase:** If possible, test a smaller project or a subset of your current project with the selected framework. This will help you assess its effectiveness and make adjustments as needed.

-

-Follow these steps and you can identify the lifecycle that not only suits your project but also ensures that your data science process is efficient and productive. Each project is unique, so tailoring the lifecycle to its specific demands is critical to success.

-

-## Generic Framework for Beginners

-

-

-

-While there are many different data science lifecycles and ways to tackle data science projects, if you’re just getting started and you’re trying to push your first project into production, relying on a beginner-friendly lifecycle might be a better idea.

-

-A generic framework for beginners in data science simplifies the lifecycle into manageable steps, making it easier to understand and implement. You can follow these steps to define your new framework:

-

-### 1\. Define the problem

-

-

-

-Start by clearly identifying the problem you aim to solve. Consider the objectives and outcomes you want to achieve, and ensure these are aligned with the needs of any stakeholder. This will help focus your efforts during development and set the right expectations with your stakeholders.

-

-### 2\. Collect and clean data

-

-

-

-Gather data from reliable and relevant sources. During this stage, focus on ensuring data quality by treating inaccurate data, filling in missing values, identifying and reducing potential data biases, and finally converting raw data into usable formats.

-

-### 3\. Analyze and visualize

-

-

-

-Explore the data to uncover patterns, trends, and insights. Use simple data visualization techniques such as bar graphs and scatter plots, along with basic statistical methods, to gain a deeper understanding of the dataset’s structure and variables.

-

-### 4\. Build and evaluate a model

-

-

-

-Develop a basic predictive model using accessible machine learning or statistical tools. Test the model’s performance to ensure it meets the objectives defined earlier during step 1\. For beginners, tools with user-friendly interfaces like Python libraries or Excel can be highly effective.

-

-### 5\. Share results and deploy

-

-

-

-Present your findings to stakeholders in a clear and actionable format. If applicable, deploy the model into a small-scale production environment to observe its impact and gather feedback for further improvement.

-

-**Tips for small projects:** Start with a problem you’re familiar with, such as analyzing personal expenses or predicting simple outcomes. Focus on learning the process rather than achieving perfect results. Use open-source tools and resources to experiment and build your confidence.

-

-Use this framework if this is your first data science project, evaluate your results, and most importantly, reflect on your experience.

-

-Take those insights into your next project and decide whether it would benefit from one of the standard lifecycles described above.

-

-## Conclusion

-

-The data science lifecycle is a cornerstone of modern data science. By understanding its stages and principles, professionals can navigate the complexities of data science projects with confidence.

-

-Whether you’re dealing with unstructured data, creating models, or deploying machine learning algorithms, the lifecycle provides a roadmap for success.

-

-As data science experts and teams continue to explore and refine their approaches, the lifecycle framework remains a key tool for achieving excellence in any and all operations.

-

-Finally, remember that if you’re interested in developing your data science career, you have our [data scientist](https://roadmap.sh/ai-data-scientist) and [data analyst](https://roadmap.sh/data-analyst) roadmaps at your disposal. These roadmaps will help you focus your learning time on the really important and relevant topics.

\ No newline at end of file

diff --git a/src/data/guides/ai-data-scientist-skills.md b/src/data/guides/ai-data-scientist-skills.md

deleted file mode 100644

index fd25ce060..000000000

--- a/src/data/guides/ai-data-scientist-skills.md

+++ /dev/null

@@ -1,197 +0,0 @@

----

-title: 'Top 11 Data Science Skills to Master in @currentYear@'

-description: 'Looking to excel in data science? Learn the must-have skills for @currentYear@ with our expert guide and advance your data science career.'

-authorId: fernando

-excludedBySlug: '/ai-data-scientist/skills'

-seo:

- title: 'Top 11 Data Science Skills to Master in @currentYear@'

- description: 'Looking to excel in data science? Learn the must-have skills for @currentYear@ with our expert guide and advance your data science career.'

- ogImageUrl: 'https://assets.roadmap.sh/guest/data-science-skills-to-master-q36qn.jpg'

-isNew: false

-type: 'textual'

-date: 2025-01-28

-sitemap:

- priority: 0.7

- changefreq: 'weekly'

-tags:

- - 'guide'

- - 'textual-guide'

- - 'guide-sitemap'

----

-

-

-

-Data science is becoming more relevant as a field and profession by the day. Part of this constant change is the mind-blowing speed at which AI is evolving these days. Every day a new model is released, every week a new product is built around it, and every month OpenAI releases an earth-shattering change that pushes the field even further than before.

-

-Data scientists sit at the core of that progress, but what does it take to master the profession?

-

-Mastering the essential data scientist skills goes beyond just solving complex problems. It includes the ability to handle data workflows, build machine learning models, and interpret data trends effectively.

-

-In this guide, we'll explore the top 11 skills that future data scientists must work on to shine brighter than the rest in 2025, setting a foundation for long-term success.

-

-These are the data scientist skills covered in the article:

-

-* Programming proficiency with **Python, R, and SQL**

-* Data manipulation and analysis, including **data wrangling** and **exploratory data analysis**

-* Mastery of **machine learning** and **AI techniques**

-* Strong statistical and **mathematical foundations**

-* Familiarity with **big data technologies**

-* Data engineering for infrastructure and **ETL pipelines**

-* Expertise in **data visualization** with tools like **Plotly** and **D3.js**

-* **Domain knowledge** for aligning data science projects with business goals

-* **Soft skills** for communication, collaboration, and creativity

-* **Feature engineering** and selection for **model optimization**

-* Staying current with trends like **MLOps** and **Generative AI**

-

-## **Understanding Data Science**

-

-[Data science](https://roadmap.sh/ai-data-scientist) is an interdisciplinary field that combines multiple disciplines to make sense of data and drive actionable insights. It integrates programming, statistical analysis, and domain knowledge to uncover patterns and trends in both structured and unstructured data. This powerful combination enables data professionals to solve a variety of challenges, such as:

-

-* Building predictive models to forecast sales or identify customer churn.

-* Developing optimization techniques to streamline supply chains or allocate resources more effectively.

-* Leveraging automation and artificial intelligence to create personalized recommendations or detect fraudulent activity in massive datasets.

-

-At its core, data science empowers organizations to turn raw data into actionable insights. By interpreting data effectively and applying statistical models, data scientists support data-driven decision-making, ensuring businesses maintain a competitive edge.

-

-The data science field requires a unique mix of technical skills, analytical prowess, and creativity to handle the vast array of complex data sets encountered in real-world scenarios. In other words, being a data scientist is not for everyone.

-

-## **1\. Programming Proficiency**

-

-

-

-Programming remains a cornerstone of the data science field, forming the foundation for nearly every task in data science projects. Mastery of programming languages like Python, R, and SQL is crucial for aspiring data scientists to handle data workflows effectively.

-

-Python is the undisputed leader in data science, thanks to its extensive libraries and frameworks. Pandas, NumPy, and Scikit-learn are essential for tasks ranging from data wrangling and numerical analysis to building machine learning models. Deep learning tools such as TensorFlow and PyTorch make Python indispensable for tackling advanced challenges like developing artificial neural networks for image recognition and natural language processing (NLP).

-

-R excels in statistical analysis and visualization. Its specialized libraries, like ggplot2 for data visualization and caret for machine learning models, make it a preferred choice for academics and data analysis tasks that require interpreting data trends and creating statistical models.

-

-SQL is the backbone of database management, which is essential for extracting, querying, and preparing data from structured databases. A strong command of SQL allows data professionals to manage massive datasets efficiently and ensure smooth integration with analytical tools.

-

-## **2\. Data Manipulation and Analysis**

-

-

-

-The ability to manipulate and analyze data lies at the heart of data science skills. These tasks involve transforming raw data into a format suitable for analysis and extracting insights through statistical concepts and exploratory data analysis (EDA).

-

-Data wrangling is a critical skill for cleaning and preparing raw data, addressing missing values, and reshaping complex data sets. For example, consider a dataset containing customer transaction records with incomplete information. Using tools like Pandas in Python, a data scientist can identify missing values, impute or drop them as appropriate, and restructure the data to focus on specific variables like transaction frequency or total purchase amounts. This process ensures the dataset is ready for meaningful analysis.

-

-Tools like Pandas, PySpark, and Dask are invaluable for handling unstructured data or working with massive datasets efficiently. These tools allow data scientists to transform complex data sets into manageable and analyzable forms, which is foundational for building machine learning models or conducting advanced statistical analysis.

-

-Performing exploratory data analysis allows data scientists to identify patterns, correlations, and anomalies within structured data. Visualization libraries like Matplotlib and Seaborn, combined with Python scripts, play a significant role in understanding data insights before building predictive models or statistical models.

-

-## **3\. Machine Learning and AI**

-

-

-

-Machine learning is a driving force in the data science industry, enabling data-driven decisions across sectors and revolutionizing how organizations interpret data and make predictions. Mastering machine learning algorithms and frameworks is among the top data science skills for aspiring data scientists who wish to excel in analyzing data and creating impactful solutions.

-

-Data scientists commonly tackle supervised learning tasks, such as predicting housing prices through regression models or identifying fraudulent transactions with classification algorithms. For example, using Scikit-learn, a data scientist can train a decision tree to categorize customer complaints into predefined categories for better issue resolution. Additionally, unsupervised techniques like clustering are applied in market segmentation to group customers based on purchasing patterns, helping businesses make data-driven decisions.

-

-Deep learning represents the cutting edge of artificial intelligence, utilizing artificial neural networks to manage unstructured data and solve highly complex problems. Frameworks like TensorFlow and PyTorch are essential tools for developing advanced solutions, such as NLP models for chatbot interactions or generative AI for creating realistic images. These tools empower data scientists to push the boundaries of innovation and unlock actionable insights from vast and complex datasets.

-

-## **4\. Statistical and Mathematical Foundations**

-

-

-

-Statistical concepts and mathematical skills form the backbone of building robust data models and interpreting data insights. These foundational skills are indispensable for anyone aiming to succeed in the data science field.

-

-Probability theory and hypothesis testing play a vital role in understanding uncertainty in data workflows. For instance, a data scientist might use hypothesis testing to evaluate whether a new marketing strategy leads to higher sales compared to the current approach, ensuring data-driven decision-making.

-

-Linear algebra and calculus are crucial for developing and optimizing machine learning algorithms. Techniques like matrix decomposition and gradient descent are used to train neural networks and enhance their predictive accuracy. These mathematical tools are the engine behind many advanced algorithms, making them essential data scientist skills.

-

-Advanced statistical analysis, including A/B testing and Bayesian inference, helps validate predictions and understand relationships within complex datasets. For example, A/B testing can determine which website design yields better user engagement, providing actionable insights to businesses.

-

-## **5\. Big Data Technologies**

-

-

-

-While big data skills are secondary for most data scientists, understanding big data technologies enhances their ability to handle massive datasets efficiently. Familiarity with tools like Apache Spark and Hadoop allows data scientists to process and analyze distributed data, which is especially important for projects involving millions of records. For example, Apache Spark can be used to calculate real-time metrics on user behavior across e-commerce platforms, enabling businesses to personalize experiences dynamically.

-

-Cloud computing skills, including proficiency with platforms like AWS or GCP, are also valuable for deploying machine learning projects at scale. A data scientist working with GCP's BigQuery can query massive datasets in seconds, facilitating faster insights for time-sensitive decisions. These technologies, while not the core of a data scientist's responsibilities, are crucial for ensuring scalability and efficiency in data workflows.

-

-## **6\. Data Engineering**

-

-

-

-Data engineering complements data science by creating the infrastructure required to analyze data effectively. This skill set ensures that data flows seamlessly through pipelines, enabling analysis and decision-making.

-

-Designing ETL (Extract, Transform, Load) pipelines is a critical part of data engineering. For instance, a data engineer might create a pipeline to collect raw sales data from multiple sources, transform it by standardizing formats and handling missing values, and load it into a database for further analysis. These workflows are the backbone of data preparation.

-

-Tools like Apache Airflow can orchestrate and streamline those workflows, while Kafka handles real-time data streams, such as social media feeds or IoT sensor data, so that they are processed with minimal delay. For example, a Kafka pipeline could ingest weather data to update forecasts in real time.

-

-Finally, storing and querying complex data sets in cloud computing with tools like Snowflake or BigQuery allows data scientists to interact with massive datasets effortlessly.

-

-These platforms support scalable storage and high-performance queries, enabling faster analysis and actionable insights.

-

-## **7\. Data Visualization**

-

-

-

-Data visualization is a cornerstone of the data science field, as it enables data professionals to present data and communicate findings effectively. While traditional tools like Tableau and Power BI are widely used, aspiring data scientists should prioritize programming-based tools like Plotly and D3.js for greater flexibility and customization.

-

-For example, using Plotly, a data scientist can create an interactive dashboard to visualize customer purchase trends over time, allowing stakeholders to explore the data dynamically. Similarly, D3.js offers unparalleled control for designing custom visualizations, such as heatmaps or network graphs, that convey complex relationships in a visually compelling manner.

-

-Applying storytelling techniques further enhances the impact of visualizations. By weaving data insights into a narrative, data scientists can ensure their findings resonate with stakeholders and drive actionable decisions. For instance, a well-crafted story supported by visuals can explain how seasonal demand patterns affect inventory management, bridging the gap between technical analysis and strategic planning.

-

-## **8\. Business and Domain Knowledge**

-

-

-

-Domain knowledge enhances the relevance of data science projects by aligning them with organizational goals and addressing unique industry-specific challenges. Understanding the context in which data is applied allows data professionals to make their analysis more impactful and actionable.

-

-For example, in the finance industry, a data scientist with domain expertise can design predictive models that assess credit risk by analyzing complex data sets of customer transactions, income, and past credit behavior. These models enable financial institutions to make data-driven decisions about lending policies.

-

-In healthcare, domain knowledge allows data scientists to interpret medical data effectively, such as identifying trends in patient outcomes based on treatment history. By leveraging data models tailored to clinical needs, data professionals can help improve patient care and operational efficiency in hospitals.

-

-This alignment ensures that insights are not only technically robust but also directly applicable to solving real-world problems, making domain knowledge an indispensable skill for data professionals seeking to maximize their impact.

-

-## **9\. Soft Skills**

-

-

-

-Soft skills are as essential as technical skills in the data science field, bridging the gap between complex data analysis and practical implementation. These skills enhance a data scientist's ability to communicate findings, collaborate with diverse teams, and approach challenges with innovative solutions.

-

-**Communication** is critical for translating data insights into actionable strategies. For example, a data scientist might present the results of an exploratory data analysis to marketing executives, breaking down statistical models into simple, actionable insights that drive campaign strategies. The ability to clearly interpret data ensures that stakeholders understand and trust the findings.

-

-**Collaboration** is equally vital, as data science projects often involve cross-functional teams. For instance, a data scientist might work closely with software engineers to integrate machine learning models into a production environment or partner with domain experts to ensure that data-driven decisions align with business objectives. Effective teamwork ensures seamless data workflows and successful project outcomes.

-

-**Creativity** allows data scientists to find innovative ways to address complex problems. A creative data scientist might devise a novel approach to handling unstructured data, such as using natural language processing (NLP) techniques to extract insights from customer reviews, providing actionable insights that improve product development.

-

-These critical soft skills complement technical expertise, making data professionals indispensable contributors to their organizations.

-

-## 10\. Feature engineering and selection for model optimization

-

-

-

-For machine learning models to interpret and use any type of data, that data needs to be turned into features. That is where feature engineering and selection come into play. These are two critical steps in the data science workflow because they directly influence the performance and accuracy of the models. If you think about it, the better the model understands what data to focus on, the better it'll perform.

-

-These processes involve creating, selecting, and transforming raw data into useful features loaded with meaning that help represent the underlying problem for the model.

-

-For example, imagine building a model to predict house prices. Raw data might include information like the size of the house in square meters, the number of rooms, and the year it was built. Through feature engineering, a data scientist could create new features, such as "square meters per room" or "age of the house," which make the data more informative for the model. These features can highlight trends that a model might otherwise miss.

-

-Feature selection, on the other hand, focuses on reducing the model's dependence on unnecessary inputs by identifying the most relevant features and removing redundant or irrelevant ones. For example, in a retail scenario where a model predicts customer churn, it might benefit from focusing on features like "purchase frequency" and "customer feedback sentiment" while ignoring less impactful ones like "the time of day purchases are made". This keeps the model from being overwhelmed by noise, improving both its efficiency and accuracy.

-

-If you're looking to improve your data science game, then focusing on feature engineering and selection can definitely have that effect.

-

-## 11\. Staying Current

-

-

-

-The data science field evolves at an unprecedented pace, driven by advancements in artificial intelligence, machine learning, and data technologies. Staying current with emerging trends is essential for maintaining a competitive edge and excelling in the industry.

-

-Joining **data science communities**, such as forums or online groups, provides a platform for exchanging ideas, discussing challenges, and learning from peers. For instance, platforms like Kaggle or GitHub allow aspiring data scientists to collaborate on data science projects and gain exposure to real-world applications.

-

-Attending **data science conferences** is another effective way to stay informed. Events like NeurIPS, Strata Data Conference, or PyData showcase cutting-edge research and practical case studies, offering insights into the latest advancements in machine learning models, big data technologies, and cloud computing tools.

-

-Engaging in **open-source projects** not only sharpens technical skills but also helps data professionals contribute to the broader data science community. For example, contributing to an open-source MLOps framework might provide invaluable experience in deploying and monitoring machine learning pipelines.

-

-Embracing trends like **MLOps** for operationalizing machine learning, **AutoML** for automating model selection, and **Generative AI** for creating synthetic data ensures that data scientists remain at the forefront of innovation. These emerging technologies are reshaping the data science field, making continuous learning a non-negotiable aspect of career growth.

-

-## **Summary**

-

-Mastering these essential data scientist skills—from programming languages and machine learning skills to interpreting data insights and statistical models—will future-proof your [career path in data science](https://roadmap.sh/ai-data-scientist/career-path). These include the core skills of data manipulation, statistical analysis, and data visualization, all of which are central to the data science field.

-

-In addition, while big data technologies and data engineering skills are not the central focus of a data scientist's role, they serve as valuable, data science-adjacent competencies. Familiarity with big data tools like Apache Spark and cloud computing platforms can enhance scalability and efficiency in handling massive datasets, while data engineering knowledge helps create robust pipelines to support analysis. By building expertise in these areas and maintaining adaptability, you can excel in this dynamic, data-driven industry.

-

-Check out our [data science roadmap](https://roadmap.sh/ai-data-scientist) next to discover what your potential learning path could look like in this role.

-

diff --git a/src/data/guides/ai-data-scientist-tools.md b/src/data/guides/ai-data-scientist-tools.md

deleted file mode 100644

index 5c803b2e4..000000000

--- a/src/data/guides/ai-data-scientist-tools.md

+++ /dev/null

@@ -1,313 +0,0 @@

----

-title: 'Data Science Tools: Our Top 11 Recommendations for @currentYear@'

-description: 'Master your data science projects with our top 11 tools for 2025! Discover the best platforms for data analysis, visualization, and machine learning.'

-authorId: fernando

-excludedBySlug: '/ai-data-scientist/tools'

-seo:

- title: 'Data Science Tools: Our Top 11 Recommendations for @currentYear@'

- description: 'Master your data science projects with our top 11 tools for 2025! Discover the best platforms for data analysis, visualization, and machine learning.'

- ogImageUrl: 'https://assets.roadmap.sh/guest/data-science-tools-1a9w1.jpg'

-isNew: false

-type: 'textual'

-date: 2025-01-28

-sitemap:

- priority: 0.7

- changefreq: 'weekly'

-tags:

- - 'guide'

- - 'textual-guide'

- - 'guide-sitemap'

----

-

-

-

-In case you haven't noticed, the data science industry is constantly evolving, potentially even faster than the web industry (which says a lot!).

-

-And 2025 is shaping up to be another transformative year for tools and technologies. Whether you're exploring machine learning tools, predictive modeling, data management, or data visualization tools, there's an incredible array of software to enable [data scientists](https://roadmap.sh/ai-data-scientist) to analyze data efficiently, manage data effectively, and communicate insights.

-

-In this article, we dive into the essential data science tools you need to know for 2025, complete with ratings and expert picks to help you navigate the options.

-

-## What is Data Science?

-

-Data science is an interdisciplinary field that combines mathematics, statistics, computer science, and domain expertise to extract meaningful insights from data. It involves collecting, cleaning, and analyzing large datasets to uncover patterns, trends, and actionable information. At its core, data science aims to solve complex problems through data-driven decision-making, using techniques such as machine learning, predictive modeling, and data visualization.

-

-The data science process typically involves:

-

-* **Data Collection**: Gathering data from various sources, such as databases, APIs, or real-time sensors.

-* **Data Preparation**: Cleaning and transforming raw data into a usable format for analysis.

-* **Exploratory Data Analysis (EDA)**: Identifying trends, correlations, and outliers within the dataset.

-* **Modeling**: Using algorithms and statistical methods to make predictions or classify data.

-* **Interpretation and Communication**: Visualizing results and presenting insights to stakeholders in an understandable manner.

-

-Data science plays a key role in various industries, including healthcare, finance, marketing, and technology, driving innovation and efficiency by leveraging the power of data.

-

-## Criteria for Ratings

-

-We rated each of the best data science tools on a 5-star scale based on:

-

-* **Performance:** How efficiently the tool handles large and complex datasets. This includes speed, resource optimization, and reliability during computation.

-* **Scalability:** The ability to scale across big data and multiple datasets. Tools were evaluated on their capability to maintain performance as data sizes grow.

-* **Community and Ecosystem:** Availability of resources, support, and integrations. Tools with strong community support and extensive libraries received higher ratings.

-* **Learning Curve:** Ease of adoption for new and experienced users. Tools with clear documentation and intuitive interfaces were rated more favorably.

-

-## Expert Recommendations

-

-Picking the best tools for your project is never easy, and it's hard to make an objective decision if you don't have experience with any of them.

-

-So to make your life a bit easier, here's my personal recommendation. Take it or leave it, but at least you'll know where to start:

-

-While each tool has its strengths, my favorite pick among them is **TensorFlow**. Its perfect scores in performance, scalability, and community support (you'll see them in the table below), combined with its moderate learning curve, make it an amazing choice for building advanced neural networks and developing predictive analytics systems. You can do so much with it, such as image recognition, natural language processing, and recommendation systems, which cements its position as the leading choice (and my personal choice) in 2025.

-

-Now, to help you understand and compare the rest of the tools from this guide, the table below summarizes their grades across key criteria: performance, scalability, community support, and learning curve. It also highlights the primary use cases for these tools.

-

-| Tool | Performance | Scalability | Community | Learning Curve | Best For |

-| ----- | ----- | ----- | ----- | ----- | ----- |

-| TensorFlow | 5 | 5 | 5 | 4 | Advanced neural networks, predictive analytics |

-| Apache Spark | 5 | 5 | 5 | 3 | Distributed analytics, real-time streaming |

-| Jupyter Notebooks | 4 | 4 | 5 | 5 | Exploratory analysis, education |

-| Julia | 4 | 4 | 4 | 3 | Simulations, statistical modeling |

-| NumPy | 4 | 3 | 5 | 5 | Numerical arrays, preprocessing workflows |

-| Polars | 5 | 4 | 4 | 4 | Data preprocessing, ETL acceleration |

-| Apache Arrow | 5 | 5 | 4 | 3 | Interoperability, streaming analytics |

-| Streamlit | 4 | 4 | 5 | 5 | Interactive dashboards, rapid deployment |

-| DuckDB | 4 | 4 | 4 | 5 | SQL queries, lightweight warehousing |

-| dbt | 4 | 4 | 5 | 4 | SQL transformations, pipeline automation |

-| Matplotlib | 4 | 3 | 5 | 4 | Advanced visualizations, publication graphics |

-

-Let's now take a closer look at each of these tools to understand why they're in this guide.

-

-## Data science tools for ML & deep learning

-

-### TensorFlow

-

-

-

-TensorFlow remains one of the top data science tools for deep learning models and machine learning applications. Developed by Google, this open-source platform excels in building neural networks, predictive analytics, and natural language processing models.

-

-* **Performance (★★★★★):** TensorFlow achieves top marks here due to its use of GPU and TPU acceleration, which allows seamless handling of extremely large models. Its ability to train complex networks without compromising on speed solidifies its high-performance ranking.

-* **Scalability (★★★★★):** TensorFlow scales from single devices to distributed systems effortlessly, enabling use in both prototyping and full-scale production.

-* **Community and Ecosystem (★★★★★):** With an active developer community and comprehensive support, TensorFlow offers unmatched resources and third-party integrations.

-* **Learning Curve (★★★★):** While it offers immense power, mastering TensorFlow's advanced features requires time, making it slightly less accessible for beginners compared to simpler frameworks.

-

-**Strengths:** TensorFlow is a powerhouse for performance and scalability in the world of machine learning. Its GPU and TPU acceleration allow users to train and deploy complex models faster than many competitors. The massive community ensures constant innovation, with frequent updates, robust third-party integrations, and an ever-growing library of resources. The inclusion of TensorFlow Lite and TensorFlow.js makes it versatile for both edge computing and web applications.

-

-**Best For:** Developing advanced neural networks for image recognition, natural language processing pipelines, building recommendation systems, and creating robust predictive analytics tools for a wide array of industries.

-

-**Used by:** Google itself uses TensorFlow extensively for tasks like search algorithms, image recognition, and natural language processing. Similarly, Amazon employs TensorFlow to power recommendation systems and optimize demand forecasting.

-

-## Data science tools for big data processing

-

-### Apache Spark

-

-

-

-An Apache Software Foundation project, Apache Spark is a powerhouse for big data processing, enabling data scientists to perform batch processing and streaming data analysis. It supports a wide range of programming languages, including Python, Scala, and Java, and integrates well with other big data tools like Hadoop and Kafka.

-

-* **Performance (★★★★★):** Spark excels in processing speed thanks to its in-memory computing capabilities, making it a leader in real-time and batch data processing.

-* **Scalability (★★★★★):** Designed for distributed systems, Spark handles petabytes of data with ease, maintaining efficiency across clusters.

-* **Community and Ecosystem (★★★★★):** Spark's widespread adoption and integration with tools like Kafka and Hadoop make it a staple for big data workflows.

-* **Learning Curve (★★★):** Beginners may find distributed computing concepts challenging, though excellent documentation helps mitigate this.

-

-**Strengths:** Spark stands out for its lightning-fast processing speed and flexibility. Its in-memory computation ensures minimal delays during large-scale batch or streaming tasks. The compatibility with multiple programming languages and big data tools enhances its integration into diverse tech stacks.

-

-**Best For:** Executing large-scale data analytics in distributed systems, real-time stream processing for IoT applications, running ETL pipelines, and data mining for insights in industries like finance and healthcare.

-

-**Used by:** Apache Spark has been adopted by companies like Uber and Shopify. Uber uses Spark for real-time analytics and stream processing, enabling efficient ride-sharing logistics. Shopify relies on Spark to process large volumes of e-commerce data, supporting advanced analytics and business intelligence workflows.

-

-## Exploratory & Collaborative tools

-

-### Jupyter Notebooks

-

-

-

-Jupyter Notebooks are an essential data science tool for creating interactive and shareable documents that combine code, visualizations, and narrative text. With support for over 40 programming languages, including Python, R, and Julia, Jupyter facilitates collaboration and exploratory data analysis.

-

-* **Performance (★★★★):** Jupyter is designed for interactivity rather than computational intensity, which makes it highly effective for small to medium-scale projects but less suitable for high-performance tasks.

-* **Scalability (★★★★):** While Jupyter itself isn't designed for massive datasets, its compatibility with scalable backends like Apache Spark ensures it remains relevant for larger projects.

-* **Community and Ecosystem (★★★★★):** Jupyter's open-source nature and extensive community-driven extensions make it a powerhouse for versatility and support.

-* **Learning Curve (★★★★★):** Its simple and intuitive interface makes it one of the most accessible tools for beginners and professionals alike.

-

-**Strengths:** Jupyter's flexibility and ease of use make it indispensable for exploratory analysis and education. Its ability to integrate code, output, and explanatory text in a single interface fosters collaboration and transparency.

-

-**Best For:** Creating educational tutorials, performing exploratory data analysis, prototyping machine learning models, and sharing reports that integrate code with rich visualizations.

-